Back in 1988, when I first got a job writing software, the world was fairly simple. The development environment we had was character-based, the database was integrated and traversed with cursors, and we built a whole new administrative system covering everything but the kitchen sink. It took us five years to complete the project, basically because the client kept changing his mind every now and then, and because every change in the code could break code elsewhere in the system. Unit testing hadn’t been invented yet, and testing was done by the end users. In production.

So much for monoliths. Then in 1994 I joined a company that built desktop applications – remember, the World Wide Web was only a couple of years old, and web applications hadn’t been invented yet. We used a great tool called PowerBuilder, and now we had two components to worry about: the application on the desktop and the database on the server. The applications we built usually served departments or sometimes even a whole company. Not highly complicated, but not highly scalable either. Ah well, we had fun as long as the client-server paradigm lasted.

Component-based development

The world got more complex in the mid-nineties. Companies wanted web applications, mostly running on intranets, to get rid of desktop deployments. And applications needed to serve multiple departments, and sometimes even cross company borders. A new paradigm set in: component-based development, also known as CBD. The paradigm promised us reuse, scalability, flexibility and a way to harvest existing code (usually written in COBOL). We started breaking up our systems into big functional chunks, and tried hard to make those components communicate with each other. Java was invented and everybody now wanted to write code in Java (apparently some people still do nowadays). Components were running on impossible technologies such as application servers and CORBA (to impress your co-workers, look that one up on Wikipedia). The good old days of object request brokers!

Scary stuff, an object request broker architecture

At that time I was working for a large international bank, trying to set up a methodology for component-based development. Even with a heavily armed team of Andersen consultants it took us three years to write the damn thing. In the end both the paradigm and the technology were too complex to write decent, well-performing software. It just never took off.

Service-oriented architecture

At that point in time, the early years of this century, I thought we had gotten rid of distributed software development, and happily started building web applications. I guess everyone bravely ignored Martin Fowler’s first law of distributed objects: don’t distribute your objects. Gradually we moved into the next paradigm of distributed computing, repackaging the promises of component-based development into a renewed set of technologies. We now started doing business process modeling (BPM), and implemented these processes in enterprise service buses (ESBs), with components delivering services. We were in the age of service-oriented architecture, better known as SOA.

Coming from CBD, SOA seemed easier. As long as your components – the producers – were hooked into the enterprise service bus, we figured we could build scalable and flexible systems. We now had much smaller components that we could actually extract from our existing systems (now written not only in COBOL, but also in PowerBuilder, .NET and Java). The mandatory design patterns books were published and the world was ready to go. This time we would crack it!

An enterprise service bus

I found myself working for an international transport company, and we happily built around SAP middleware, which delivered both ESB and BPM tooling. We now not only needed regular Java and .NET developers, but employed middleware developers and SAP consultants as well. And although agile was introduced to speed up development (I know, that is not the right argument), projects still suffered from sluggishness. Moreover, once we got pieces of the puzzle in place, we started to realize that integration testing and deploying new releases got more complicated by the day.

At last: microservices!

I do hope you’ll forgive me this long and winding introduction to the actual topic: microservices. You might think: why do we need yet another article on microservices? Isn’t there enough literature on the topic already? Well, yes, there is. But if you look closely at the flood of articles on the internet, most of them only describe the benefits and possibilities of microservices (sing hallelujah), and some of them look at the few famous pathfinding examples (Netflix, Amazon, and Netflix, and Amazon, and Netflix…). Only a few articles actually dig a bit deeper, and they usually consist of summing up the technologies people seem to be using when implementing microservices. It’s still early in the game.

That’s where a little historical perspective won’t hurt. I find it interesting that the promised benefits and possibilities of the predecessors of microservices are still with us. Microservices seem to promise scalable and flexible systems, based on small components that can easily be deployed independently, which in turn lets you pick the best technology per component. Basically the same promises we fell for with CBD and SOA in the past. Nothing new here, but that doesn’t mean that microservices aren’t worth investigating.

Is it different this time around?

So why is it different this time? What will make microservices the winning paradigm, where its predecessors clearly were not? What makes it tick? As a developer, there’s no doubt that I am rather enthusiastic about microservices, but I was enthusiastic as well (more or less) when people came up with CBD and SOA.

I do suppose there are differences. For the first time we seem to have the technology in place to build this type of architecture. All the fancy and complex middleware is gone, and we rely solely on very basic, long-established web protocols and technologies. Just compare REST to CORBA. Also, we seem to understand deployment much better, because we’ve learned how to do continuous integration, unit testing, and even continuous delivery. These differences suggest that we can get it to work this time around.

Still, from my historical viewpoint some skepticism is unavoidable. Ten years ago, we also really believed that service-oriented architecture would be technologically possible, that it would solve all our issues, and that we would be able to build stuff faster, more reusable and more reliable. So, to be honest, the fact that we believe the technology is ready is not much of an argument. Meanwhile, the world has also become more complex.

Over the last year I’ve been involved with a company that is moving away from its mainframe (too expensive) and a number of older Java monoliths (too big, and hard to maintain). Time-to-market also plays an important role: IT needs to support introducing a new product in months, if not in weeks. So we decided to be hip and go microservices. Here’s my recap of the good, the bad and the ugly of microservice architectures, looking back on our first year on the road.

The good

Let’s start with the good parts. We build small components, each offering about two to six services. Good examples of such small components are a PDF component that does nothing more than generate PDFs from a template with some data, or a Customer component that allows users to search for existing customers. These components have the right size: the right size of code, the right size to understand, to document, to test and to deploy.

Our team tends to evolve into small teams designing, implementing and supporting individual components. We didn’t enforce ownership, but over time small teams pick up the work on a specific component and start feeling responsible for it.

When we outlined the basic architecture for our microservices landscape, we set a number of guidelines. We are not only building small components, but also small single-purpose web applications. Applications can talk to other applications, and can talk to components. Components handle their own persistence and storage, and can also talk to other components. Applications do not talk directly to storage. Components do not talk to each other’s storage. For us these guidelines work.
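As a rough illustration of these guidelines, here is a minimal in-process Java sketch. The names `CustomerService`, `CustomerComponent` and `CustomerLookupApp` are hypothetical, and in a real landscape the call would go over REST rather than a plain Java method call. The point is only the direction of the dependencies: the application sees a service interface, and the storage stays private to the component.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;

// The only thing applications and other components may depend on:
// the component's service interface (in production exposed over REST).
interface CustomerService {
    Optional<String> findName(String customerId);
    List<String> searchByName(String fragment);
}

// The Customer component owns its storage. Nothing outside this class can
// reach the map, mirroring "components do not talk to each other's storage".
final class CustomerComponent implements CustomerService {
    private final Map<String, String> storage = new HashMap<>(); // stand-in for its own database

    // Component-internal write path; not part of the service interface.
    void register(String customerId, String name) {
        storage.put(customerId, name);
    }

    @Override
    public Optional<String> findName(String customerId) {
        return Optional.ofNullable(storage.get(customerId));
    }

    @Override
    public List<String> searchByName(String fragment) {
        String needle = fragment.toLowerCase();
        return storage.values().stream()
                .filter(name -> name.toLowerCase().contains(needle))
                .sorted()
                .toList();
    }
}

// A small single-purpose application: it talks to the component's
// service interface, never to a database.
final class CustomerLookupApp {
    private final CustomerService customers;

    CustomerLookupApp(CustomerService customers) {
        this.customers = customers;
    }

    String greet(String customerId) {
        return customers.findName(customerId)
                .map(name -> "Hello, " + name)
                .orElse("Unknown customer");
    }
}
```

Because `CustomerLookupApp` only knows the interface, the component is free to replace its map with a relational or document database without the application noticing.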

Microservices live up to some of their promises. You can pick the right technology and persistence mechanism for each of your components. Some components persist to relational databases (DB2 or SQL Server), others persist to document databases (MongoDB in our case). The hipster term here, of course, is polyglot persistence.

We also stated that every application and component has its own domain model. We apply the principles and patterns of domain-driven design in a straightforward way. We have domain objects, value objects, aggregates, repositories and factories. Because our components are small, the domain models are fairly uncomplicated, and thus maintainable.
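A minimal Java sketch of what these building blocks can look like inside one small component follows. It is not our actual code: `Address`, `Customer`, `CustomerFactory` and `CustomerRepository` are illustrative names, and the in-memory repository stands in for whatever persistence the component happens to use.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Objects;
import java.util.Optional;
import java.util.UUID;

// Value object: immutable, compared by value, validates itself on creation.
record Address(String street, String city) {
    Address {
        Objects.requireNonNull(street, "street");
        Objects.requireNonNull(city, "city");
        if (city.isBlank()) throw new IllegalArgumentException("city must not be blank");
    }
}

// Aggregate root (a domain object with identity): all changes go through
// its methods, so it can protect its own invariants.
final class Customer {
    private final String id;
    private final String name;
    private final List<Address> addresses = new ArrayList<>();

    Customer(String id, String name) {
        this.id = id;
        this.name = name;
    }

    String id() { return id; }
    String name() { return name; }
    List<Address> addresses() { return List.copyOf(addresses); } // read-only view for callers

    void addAddress(Address address) {
        if (addresses.contains(address)) throw new IllegalStateException("duplicate address");
        addresses.add(address);
    }
}

// Factory: the one place that knows how to create a valid new aggregate.
final class CustomerFactory {
    Customer create(String name) {
        if (name == null || name.isBlank()) throw new IllegalArgumentException("name required");
        return new Customer(UUID.randomUUID().toString(), name);
    }
}

// Repository: collection-like access to aggregates, hiding the storage technology.
interface CustomerRepository {
    void save(Customer customer);
    Optional<Customer> findById(String id);
}

final class InMemoryCustomerRepository implements CustomerRepository {
    private final Map<String, Customer> byId = new HashMap<>();

    @Override
    public void save(Customer customer) { byId.put(customer.id(), customer); }

    @Override
    public Optional<Customer> findById(String id) { return Optional.ofNullable(byId.get(id)); }
}
```

Swapping `InMemoryCustomerRepository` for a Hibernate- or MongoDB-backed implementation would leave the aggregate and its callers untouched, which is what keeps polyglot persistence manageable per component.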

Although we have had quite a journey towards testing our components and services, from FitNesse to hand-written tests, we are now moving towards testers specifying tests in SoapUI, which we run both as separate tests and during builds. We had to learn to understand REST, but we’ve got this one figured out for now. And testers love it.

When we started our microservices journey, the team I was in was in a highly reactive mode: tell us what to program and how to program it, and we’ll do our job. I probably don’t have to explain what that does to the motivation of the team members. However, with microservices there is no such thing as a ready-to-use cookbook or a predefined architecture. It simply doesn’t exist. That means that we continuously find ourselves solving new pieces of the microservices puzzle, from discovering how to design microservices (we use smart use cases), to how to implement REST interfaces, how to work with a whole new set of frameworks, and a brand new way of deploying components. It’s this puzzle that makes working on this architecture interesting. We allow ourselves to learn every day.

The bad

But as always, there’s a downside. When you start going down the microservices road – for whatever reason you may find it beneficial – you need to realize that this is all brand new. Just think of it: if you’re not working for Netflix or Amazon, who do you know who actually does this already? Who has actively deployed services into production, under full load? Who can you ask?


You need to be aware that you really need to dig in and get your hands dirty. You will have to do a lot of research yourself. There are no standards yet. You will realize that any choices you make now in techniques, protocols, frameworks and tools are probably temporary. As you learn on a daily basis, newer and better options become available or necessary, and your choices will change. So if you’re looking for a ready-made IKEA construction kit for implementing microservices the right way, you might want to stay away from microservices for the next five to seven years. Just wait for the big vendors; they will jump in soon enough, as there’s money to be made.

From a design perspective you will have to start thinking differently. Designing small components is not as easy as it appears. What is a good size for a component? Yes, it has a single business purpose, undoubtedly, but how do you define the boundaries of your component? When do you decide to split a working, operational component into two or more separate components? We’ve come across a number of these challenges over the past year. Although we have split up existing components, there are no hard rules on when to actually do this. We decided mainly on gut feeling, usually when we didn’t understand a component’s structure anymore, or when we realized it was implementing multiple business functions. It gets even harder when you are chipping off components from large systems. There’s usually a lot of wiring to cut, and in the meantime you will need to guarantee that the system doesn’t break. Also, you will need a fair amount of domain knowledge to componentize your existing systems.

Basically, we found that components are not as stable as we first thought. Occasionally we merge components, but it turned out to be far more common to break components into smaller ones to provide reuse and shorter time-to-market. As an example, we pulled a Q&A component out of our Product component; it now supplies questionnaires for purposes other than products. And more recently we created a new component that only deals with validating and storing filled-in questionnaires.

There are lots of technical questions you will need to answer too. What’s the architecture of a component? Is an application a component as well? A less visible one, if you are thinking of using REST as your communication protocol (and you probably are), is: how does REST actually work? What does it mean for a service interface to be RESTful? Which return codes do you use when? How do we implement error handling in consumers if something isn’t handled as intended by one of our services? REST is not as easy as it appears. You will need to invest a lot of time and effort to find your preferred way of dealing with your service interfaces. We figured out that, to make sure services are called in a more or less uniform way, we’d better create a small framework that makes the requests and also deals with responses and errors. This way communication is handled the same way for every request, and if we need to change the underlying protocol or framework (JAX-RS in our case), we only need to change it in one location.
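The idea of such a small call framework can be sketched as follows. This is a hypothetical Java sketch, not our JAX-RS-based code: the `Transport` interface is an assumed seam behind which JAX-RS (or any other HTTP client) would sit, and the mapping of status codes to `Ok`, `NotFound` and `Failed` is one possible convention, not a standard.

```java
import java.io.IOException;
import java.util.function.Function;

// What the underlying HTTP library hands back; deliberately minimal.
record RawResponse(int status, String body) {}

// Hypothetical seam: the only place that would know about JAX-RS or another client.
@FunctionalInterface
interface Transport {
    RawResponse get(String path) throws IOException;
}

// Every consumer sees the same small, closed set of outcomes.
sealed interface ServiceResult<T> permits Ok, NotFound, Failed {}
record Ok<T>(T value) implements ServiceResult<T> {}
record NotFound<T>(String path) implements ServiceResult<T> {}
record Failed<T>(String reason, boolean retryable) implements ServiceResult<T> {}

final class ServiceClient {
    private final Transport transport;

    ServiceClient(Transport transport) {
        this.transport = transport;
    }

    // One uniform way to call a service and interpret its status code.
    <T> ServiceResult<T> get(String path, Function<String, T> parser) {
        RawResponse response;
        try {
            response = transport.get(path);
        } catch (IOException e) {
            // Network trouble: the provider may come back, so retrying can make sense.
            return new Failed<>("service unreachable: " + e.getMessage(), true);
        }
        int status = response.status();
        if (status >= 200 && status < 300) {
            return new Ok<>(parser.apply(response.body()));
        }
        if (status == 404) {
            return new NotFound<>(path);
        }
        if (status >= 400 && status < 500) {
            // The consumer sent something wrong; retrying will not help.
            return new Failed<>("client error " + status, false);
        }
        // 5xx and anything unexpected: the provider had a problem, possibly transient.
        return new Failed<>("server error " + status, true);
    }
}
```

Consumers only ever have to deal with these three outcomes, and replacing the HTTP library means writing a new `Transport`, not touching every call site.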

That automatically brings me to the next issue. Yes, microservices deliver on the promise that you can find the best technology for every component. We recognize that in our projects. Some of our components use Hibernate for persistence, some use a MongoDB connector, and some rely on additional frameworks, such as Dozer for mapping, or some PDF-generating framework. But additional frameworks bring the need for additional knowledge. At this company, we will easily grow to over a hundred small components, maybe even more. If even a quarter of these each use some specific framework of their own, we will end up with twenty-five to thirty different frameworks (did I mention we do Java?). We will need to know about all of them. And even worse, all of these frameworks (unless they’re dead) get new versions too.

Then there’s the need to standardize some of the code you are writing. Freedom of technology is all good, but if every component is implemented in a completely different way, you will end up with an almost unmaintainable code base, especially since it is likely that no one oversees all the code being written. I strongly suggest making sure there’s coherence across your components on the aspects of your code base that you could, and probably should, unify. Think of your UI components (grids, buttons, pop-ups, error boxes), validation (of domain models), talking to databases, or how responses from services are formulated. Also, although I strongly oppose having a shared domain model (please don’t go that route), there are elements in your domain models you might need to share. We share a number of enumerations and value objects, for instance, such as CustomerId or IBAN.
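To show what such shared value objects might look like, here is a Java sketch of `CustomerId` and an `Iban` type. The validation is an assumption for illustration: it applies the standard ISO 13616 mod-97 checksum, but skips the country-specific length rules a production version would need.

```java
import java.util.Locale;
import java.util.Objects;

// Shared, immutable value objects that every component can depend on,
// without sharing a whole domain model.
record CustomerId(String value) {
    CustomerId {
        Objects.requireNonNull(value, "value");
        if (value.isBlank()) throw new IllegalArgumentException("CustomerId must not be blank");
    }
}

record Iban(String value) {
    Iban {
        Objects.requireNonNull(value, "value");
        // Normalise: drop spaces and upper-case, so formatting differences do not matter.
        value = value.replace(" ", "").toUpperCase(Locale.ROOT);
        if (!value.matches("[A-Z]{2}[0-9]{2}[A-Z0-9]{1,30}")) {
            throw new IllegalArgumentException("malformed IBAN: " + value);
        }
        if (mod97(value) != 1) {
            throw new IllegalArgumentException("IBAN checksum failed: " + value);
        }
    }

    // ISO 13616 check: move the first four characters to the end, replace letters
    // by numbers (A=10 ... Z=35) and compute the remainder modulo 97 piece by piece,
    // so the very long number never has to be materialised.
    private static int mod97(String iban) {
        String rearranged = iban.substring(4) + iban.substring(0, 4);
        int remainder = 0;
        for (char c : rearranged.toCharArray()) {
            if (c >= '0' && c <= '9') {
                remainder = (remainder * 10 + (c - '0')) % 97;
            } else {
                remainder = (remainder * 100 + (c - 'A' + 10)) % 97; // letters map to two digits
            }
        }
        return remainder;
    }
}
```

Because the IBAN is normalised in the constructor, `new Iban("GB82 WEST 1234 5698 7654 32")` and `new Iban("gb82west12345698765432")` are equal, so every component compares IBANs the same way.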

If you’re a bit like us, this generic code ends up in a set of libraries – a framework, if you will – that is reused by your components. We have learned that with every new release of this home-grown framework we end up refactoring some of the code of our components. Last week I rewrote the interface of our validation framework, which was necessary to get rid of some state it kept – components need to be stateless, for obvious reasons of scalability – and I’m a bit reluctant to merge it back into the trunk when I get back to work after the weekend. Most of our components use it, and their code might not compile. I guess what I’m saying here is that it’s good to have a home-grown framework. With some discipline it will help keep your code somewhat cleaner and a tad more uniform, but you will have to think carefully about committing to it and releasing new versions of it.
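What a stateless validation interface could look like is sketched below in Java. This is not our actual validation framework; `Validator`, `rule` and `CustomerForm` are hypothetical names. The key property is that a validator keeps no fields: input comes in as an argument and the list of errors comes back as the return value, so one shared instance can safely serve concurrent requests.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Function;
import java.util.function.Predicate;

// A stateless validator: no fields, no accumulated errors between calls.
@FunctionalInterface
interface Validator<T> {
    List<String> validate(T candidate); // an empty list means "valid"

    // Combine two validators; the result is again stateless.
    default Validator<T> and(Validator<T> other) {
        return candidate -> {
            List<String> errors = new ArrayList<>(validate(candidate));
            errors.addAll(other.validate(candidate));
            return List.copyOf(errors);
        };
    }

    // Build a single-rule validator from a field accessor and a predicate.
    static <T, F> Validator<T> rule(Function<T, F> field, Predicate<F> ok, String message) {
        return candidate -> ok.test(field.apply(candidate)) ? List.of() : List.of(message);
    }
}

// Example domain input for the rules below.
record CustomerForm(String name, String email) {}
```

For example, `Validator.<CustomerForm, String>rule(CustomerForm::name, n -> n != null && !n.isBlank(), "name is required")` builds one rule, and `and` chains rules into a single validator. A stateful design that collected errors in a field would need one instance per request, or locking.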

The ugly

So what about the really nasty parts of microservices? Let’s start with deployment pipelines. One of the promises of microservices is that they can and should be individually deployed and released. If you are currently used to having a single build and deployment pipeline for the one system you are building or extending, you might be in for a treat. With microservices you are creating individual pipelines for individual components.


Releasing the first version of a component is not that hard. We started with a simple Jenkins pipeline, but are currently investigating TeamCity. We have four different environments: one for development, one for testing, one for acceptance and, of course, the production environment. Now that we are slowly getting into the process of releasing our components, most of them with their own database, we are starting to realize that we cannot do this without the support and collaboration of the operations team. We expect to slowly evolve into a continuous delivery mode, with operations incorporated in the team. Right now we already have enough trouble getting operations on board. They are used to quarterly releases of the whole landscape, and certainly have no wish to deploy components individually.

Another thing that worries me is versioning. If it is already hard enough to version a few collaborating applications, what about a hundred applications and components, each relying on a bunch of others to deliver the required services? Of course, your services are all independent, as the microservices paradigm promises. But it’s only together that your services become the system. How do you avoid turning your pretty microservices architecture into versioning hell, which seems a direct descendant of DLL hell?

To be honest, at this point in time I couldn’t advise you well on this topic, but a fair warning is probably wise. We started out with a simple three-digit numbering scheme (such as 1.2.5). The rightmost number changes when minor bugs have been fixed. The number in the middle is raised when minor additional functionality has been added to a component, and the leftmost number changes when we deploy a new version of a component with breaking changes to its interface. Not that we strongly promote regularly changing interfaces, but it does happen.
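The numbering scheme above can be captured in a small Java value type. The compatibility rule in `canServe` is an assumption for illustration: a provider is taken to serve a consumer built against a given version if the leftmost number matches and the provider is at least as new.

```java
// MAJOR.MINOR.PATCH: patch = bug fixes, minor = added functionality,
// major = breaking change to the component's interface.
record Version(int major, int minor, int patch) implements Comparable<Version> {
    Version {
        if (major < 0 || minor < 0 || patch < 0) {
            throw new IllegalArgumentException("version parts must not be negative");
        }
    }

    static Version parse(String text) {
        String[] parts = text.trim().split("\\.");
        if (parts.length != 3) {
            throw new IllegalArgumentException("expected MAJOR.MINOR.PATCH: " + text);
        }
        return new Version(Integer.parseInt(parts[0]), Integer.parseInt(parts[1]), Integer.parseInt(parts[2]));
    }

    // Same major number (no breaking change in between) and not older than required.
    boolean canServe(Version required) {
        return major == required.major && compareTo(required) >= 0;
    }

    // Numeric comparison per part, so 1.10.0 is newer than 1.9.9.
    @Override
    public int compareTo(Version other) {
        if (major != other.major) return Integer.compare(major, other.major);
        if (minor != other.minor) return Integer.compare(minor, other.minor);
        return Integer.compare(patch, other.patch);
    }

    @Override
    public String toString() {
        return major + "." + minor + "." + patch;
    }
}
```

Under this rule a consumer built against 1.2.5 can be served by 1.3.0, but not by 2.0.0 or by 1.2.4.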

Next to that, we test our services during the build, using both coded tests and tests we assemble in SoapUI. And we document the requirements of our applications and components in smart use cases and domain models, using UML. I’m quite sure that in the future we will need to take more precautions to keep our landscape sane, such as adding Swagger to document our coded services, but it’s still too early in the game to tell.

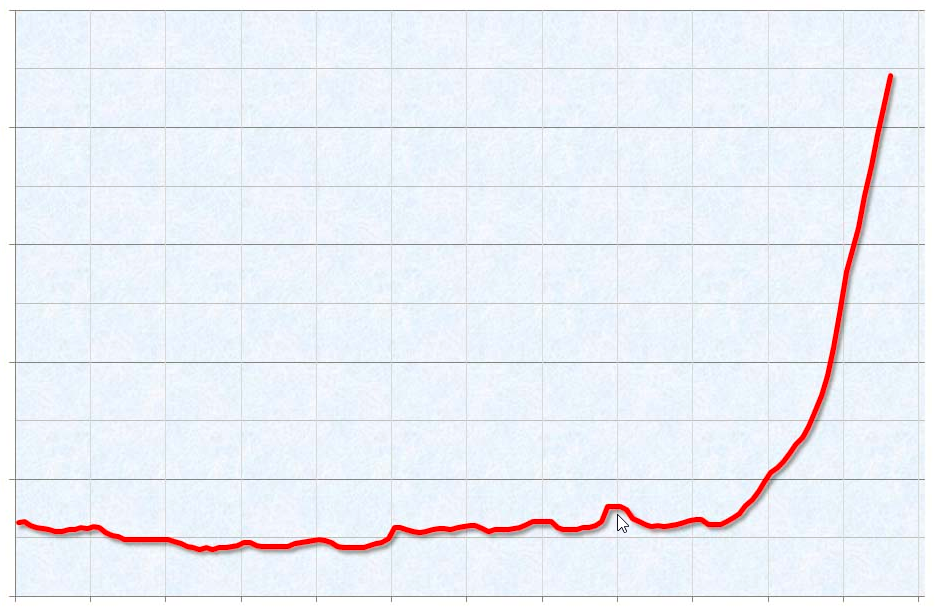

The hockey stick model

Early in the game? Yes, we’ve only been on the road to microservices for about a year. And I still haven’t figured out whether we are on a stairway to heaven or on a highway to hell. I do suppose that, as with its historical predecessors, we will end up somewhere in the middle, although I really do believe that we now have the technology to get this paradigm working – and by “we” I don’t just mean Netflix, Amazon or some hipster mobile company, but the regular mid-size companies you and I work for.

But will it be worthwhile? Shorten time-to-market? Deliver all the goodies the paradigm promises us? To be honest, I don’t know yet – despite or maybe even because of all the hype surrounding microservices. What I did notice is that, given the complexity of everything surrounding microservices, it takes quite a while before you get your first services up and running, and we are only just passing this point. Some weeks ago, I was having a beer with Sam Newman, author of Building Microservices. Sam confirmed my observations with examples of his own and referred to this pattern as the hockey stick model.

There’s a lot to take care of before you are ready to release your first service. Think of infrastructure, sorting out how to do REST properly, setting up your deployment pipelines and, above all, changing the way you think about developing software. But as soon as the first service is up and running, more and more follow, faster and faster.

Be patient

So, if there’s one thing I’ve learned over the past year, it is to be patient – a word that definitely wasn’t in my dictionary before. Don’t try to enforce all kinds of (company) standards if they just don’t exist yet. Figure it out on the fly. Allow yourself to learn. Take it step by step. Simply try to do stuff a little bit better than the day before. And as always, have fun!

This article will be published in SDN Magazine, February 2015.
